In today’s healthtech landscape, artificial intelligence (AI) promises faster diagnoses, smarter workflows, and more personalized patient care. But these powerful algorithms often rely on vast troves of data—including sensitive health information—to learn and improve. Amid that progress, a pressing question emerges: Is AI violating your privacy? In healthcare, this question has profound implications for patient trust and regulatory compliance under HIPAA.

Is AI Violating Your Privacy?

At their core, AI systems process data to recognize patterns and make predictions. When those systems ingest Protected Health Information (PHI), from electronic health records to medical imaging, any misstep in data handling can expose patient details. Privacy concerns range from models inadvertently memorizing identifiable data to unsecured third-party APIs sharing PHI without proper safeguards. Understanding these risks is the first step toward ensuring AI benefits patients without compromising their privacy.

How HIPAA Applies to AI in Healthcare

HIPAA’s Privacy and Security Rules apply to covered entities and their business associates regardless of the technology they use to store, process, or transmit PHI, and AI systems are no exception. 45 CFR § 164.502 governs how covered entities and business associates may use and disclose individually identifiable health information, and 45 CFR § 164.312 requires technical safeguards for electronic PHI (ePHI). When a hospital or clinic uses an AI vendor to analyze patient data on its behalf, that vendor generally becomes a business associate and must sign a Business Associate Agreement (BAA). The BAA legally binds the AI provider to HIPAA’s requirements and spells out each party’s responsibilities for securing patient data.

Common AI Privacy Risks

AI models trained on PHI can inadvertently store identifiable details in their parameters, a vulnerability known as “model memorization.” Without proper de-identification, attackers may reconstruct patient data from the model itself. Inference attacks pose another threat: malicious actors can probe AI APIs to deduce sensitive attributes about patients. Additionally, when AI relies on third-party cloud services or open-source components, any misconfiguration in those dependencies can leak PHI. These scenarios demonstrate that AI’s power comes with the need for vigilant data protection.
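One rough way to check for the memorization described above is to scan model outputs for identifiers that are known to appear in the training data, or for synthetic "canary" values planted there on purpose. The sketch below is illustrative only: the function names and sample values are hypothetical, the matching is simple substring search on normalized text, and a real evaluation would need far more careful probing. The list of known identifiers is itself sensitive and should be handled as PHI.

```python
import re


def normalize(text: str) -> str:
    """Lowercase the text and strip everything except letters and digits."""
    return re.sub(r"[^a-z0-9]+", "", text.lower())


def find_leaked_identifiers(model_output: str, known_identifiers: list[str]) -> list[str]:
    """Return the known identifiers that appear in the model output.

    Matching runs on normalized text, so "123-45-6789" and "123 45 6789"
    count as the same value. Short identifiers can produce false positives.
    """
    haystack = normalize(model_output)
    leaked = []
    for identifier in known_identifiers:
        needle = normalize(identifier)
        if needle and needle in haystack:
            leaked.append(identifier)
    return leaked


# Synthetic example values, not real patient data.
sample_output = "Patient Jane Q. Example, SSN 123 45 6789, was seen in clinic."
canaries = ["123-45-6789", "Jane Q Example", "MRN-0042"]
leaks = find_leaked_identifiers(sample_output, canaries)
```

An empty result does not prove a model is free of memorized data; it only shows that these particular values did not surface in the outputs that were tested.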

Technical Safeguards for HIPAA-Compliant AI

To ensure AI does not violate patient privacy, healthcare organizations must implement robust technical controls. De-identification is the first line of defense: removing direct identifiers and applying statistical techniques to mask indirect ones can greatly reduce re-identification risk (HHS De-Identification Guidance). Encryption is essential to protect PHI throughout the AI pipeline, using AES-256 for data at rest and TLS 1.2 or higher for data in transit. Access to training data and models should be restricted via role-based permissions and multi-factor authentication, with all interactions logged and monitored for suspicious activity in a centralized Security Information and Event Management (SIEM) system.
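As a rough illustration of the de-identification step, the sketch below drops direct identifiers from a single record and generalizes a few indirect ones along the lines of HIPAA’s Safe Harbor method (45 CFR § 164.514(b)(2)). The field names and the `deidentify` helper are hypothetical, and the code covers only a handful of the identifier categories Safe Harbor lists; it does not handle free-text notes or images, so treat it as a starting point rather than a compliant implementation.

```python
from datetime import date

# Hypothetical field names for illustration; real schemas will differ.
DIRECT_IDENTIFIER_FIELDS = {"name", "ssn", "mrn", "phone", "email", "street_address"}


def deidentify(record: dict) -> dict:
    """Return a copy of the record with direct identifiers removed and
    selected indirect identifiers generalized."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIER_FIELDS}

    # Reduce dates tied to the individual to the year only.
    if isinstance(out.get("admission_date"), date):
        out["admission_year"] = out.pop("admission_date").year

    # Keep only the first three ZIP digits. Safe Harbor also requires
    # replacing them with "000" for sparsely populated areas; that
    # population check is NOT implemented in this sketch.
    if "zip" in out:
        out["zip3"] = str(out.pop("zip"))[:3]

    # Aggregate ages over 89 into a single "90+" category.
    if isinstance(out.get("age"), int) and out["age"] > 89:
        out["age"] = "90+"

    return out


# Synthetic example record, not real patient data.
raw = {
    "name": "Jane Example",
    "ssn": "000-00-0000",
    "zip": "92101",
    "age": 93,
    "admission_date": date(2024, 3, 5),
    "diagnosis_code": "E11.9",
}
clean = deidentify(raw)
```

The function returns a new dictionary and leaves the input untouched, which makes it easier to keep raw PHI confined to a restricted part of the pipeline.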

Administrative & Organizational Controls

Technical measures alone are not enough. A comprehensive risk assessment helps identify vulnerabilities in AI workflows and prioritize mitigation strategies. Governance frameworks must document data sources, model training processes, and approval workflows. Workforce training ensures that data scientists and clinicians understand PHI handling requirements. Finally, BAAs with any AI vendors or cloud platforms complete the compliance picture by formalizing responsibilities for breach notification and auditing.

Best Practices for AI Deployment in Healthcare

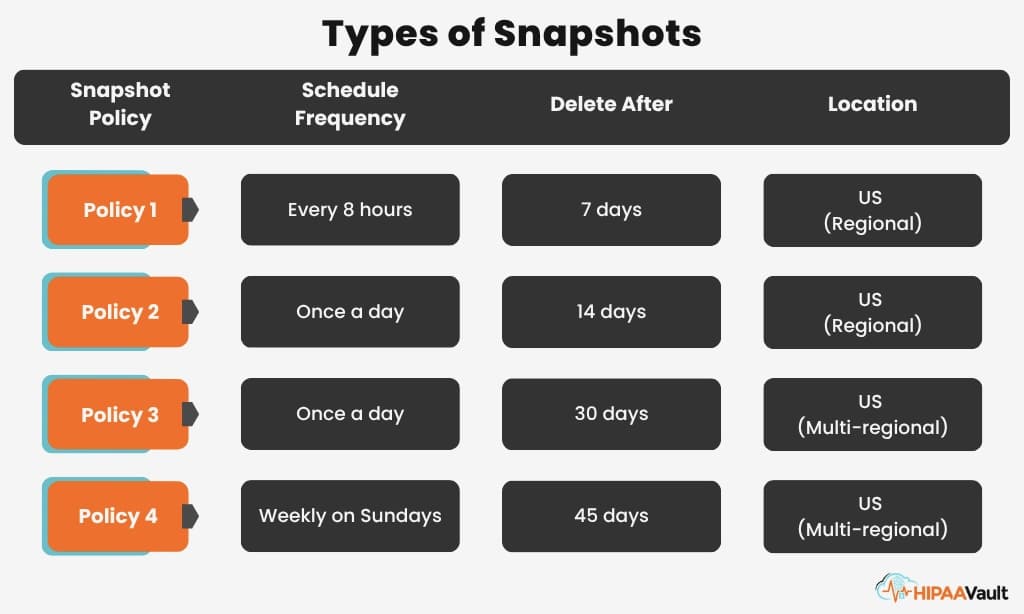

Deploying AI safely requires continuous vigilance. Regularly test models for bias and unintended data leakage. Practice data minimization by collecting only the PHI necessary for each use case, and enforce data retention policies to purge records that are no longer needed. Whenever possible, favor on-device or federated learning approaches that avoid centralized storage of raw patient data. Finally, establish clear incident-response procedures so that any suspected privacy breach triggers immediate investigation and, where a breach of unsecured PHI is confirmed, notification of affected patients without unreasonable delay and no later than 60 days after discovery, as HIPAA’s Breach Notification Rule (45 CFR § 164.404) requires.
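The data minimization and retention practices above can be sketched in a few lines. Everything here is an assumption made for illustration: the use-case name, the allowlisted fields, the `collected_at` timestamp field, and the one-year retention window are hypothetical and would come from an organization’s own policies.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical allowlist: the only fields each use case may receive.
ALLOWED_FIELDS = {
    "readmission_model": {"age", "diagnosis_code", "length_of_stay"},
}


def minimize(record: dict, use_case: str) -> dict:
    """Keep only the fields that the given use case is allowed to see."""
    allowed = ALLOWED_FIELDS[use_case]
    return {k: v for k, v in record.items() if k in allowed}


def purge_expired(records: list[dict], retention: timedelta, now: datetime) -> list[dict]:
    """Return only the records collected within the retention window."""
    cutoff = now - retention
    return [r for r in records if r["collected_at"] >= cutoff]


# Synthetic example data, not real patient records.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"age": 61, "diagnosis_code": "I50.9", "phone": "555-0100",
     "collected_at": datetime(2024, 6, 1, tzinfo=timezone.utc)},
    {"age": 47, "diagnosis_code": "E11.9", "phone": "555-0101",
     "collected_at": datetime(2023, 6, 1, tzinfo=timezone.utc)},
]
kept = purge_expired(records, timedelta(days=365), now)
training_rows = [minimize(r, "readmission_model") for r in kept]
```

An allowlist is used rather than a blocklist so that a newly added field stays out of the training set until someone explicitly approves it.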

How HIPAA Vault Supports Secure, Compliant AI

HIPAA Vault’s AI-ready cloud solutions provide a turnkey environment for healthcare organizations to develop and deploy AI models without risking privacy violations. Our platform offers fully managed encryption at rest and in transit, fine-grained access controls, continuous security monitoring, and detailed audit logging. We sign BAAs for all our services, ensuring legal compliance. Our compliance experts also assist with risk assessments, governance frameworks, and incident-response planning—so you can innovate confidently, knowing your AI initiatives meet HIPAA’s rigorous standards.

Conclusion: Balancing Innovation and Privacy

AI holds transformative potential for healthcare, but it must be deployed responsibly. By understanding how to safeguard PHI through de-identification, encryption, access controls, and rigorous governance, organizations can harness AI’s benefits without compromising patient privacy. If you’re ready to build AI solutions under HIPAA’s framework, partner with HIPAA Vault to secure your data, streamline compliance, and maintain patient trust.