Artificial intelligence (AI) is reshaping healthcare. From improving diagnostic accuracy with imaging analysis to automating clinical documentation and enhancing patient engagement through intelligent chatbots, AI promises faster, more efficient care. But with this innovation comes a pressing concern: does AI comply with HIPAA?

The short answer is yes—AI can be HIPAA-compliant, but only when implemented with the appropriate technical, administrative, and contractual safeguards. AI systems that access or process Protected Health Information (PHI) must meet the requirements outlined in the HIPAA Privacy and Security Rules. This article explores what that means in practice and how to build and deploy AI applications that align with federal compliance standards.

The Rise of AI in Healthcare: Promise Meets Regulation

AI has already made inroads across multiple facets of healthcare. Clinical decision support, natural language processing, and operational optimization are just a few areas where machine learning models are helping reduce costs and improve outcomes. According to a 2023 report by McKinsey & Company, generative AI alone could deliver up to $360 billion annually in value across the U.S. healthcare sector by streamlining administrative workflows, accelerating R&D, and supporting clinical diagnostics¹.

Yet, this rapid integration raises complex data privacy and compliance questions. AI systems often require access to large datasets—many of which contain sensitive health information. If these systems handle PHI, they must operate in accordance with HIPAA’s data protection mandates. Organizations that ignore these requirements expose themselves to potential fines, lawsuits, and reputational harm.

Understanding HIPAA’s Scope for AI Deployments

The Health Insurance Portability and Accountability Act (HIPAA) governs how covered entities (like hospitals, clinics, and insurers) and their business associates (such as SaaS providers, developers, and cloud vendors) manage PHI. The Privacy Rule (45 CFR Part 164, Subpart E) governs when PHI may be used or disclosed, with its general rules in §164.502 and the definition of PHI itself in 45 CFR §160.103. The Security Rule (45 CFR Part 164, Subpart C) outlines the administrative, physical, and technical safeguards that must be in place to protect electronic PHI (ePHI); §164.312 covers the technical safeguards.

When AI tools are trained on or interact with PHI (for example, when summarizing patient notes or generating personalized treatment recommendations), they fall within HIPAA’s scope. In such cases, organizations must ensure that AI systems encrypt data in transit and at rest, enforce access controls, log all access attempts, and limit exposure to only the “minimum necessary” data. In addition, any AI vendor that creates, receives, maintains, or transmits PHI on behalf of a covered entity must sign a Business Associate Agreement (BAA) with that entity. Failure to secure PHI under these guidelines could result in penalties of up to $1.5 million per violation category per year (a statutory cap that is adjusted annually for inflation), according to the U.S. Department of Health and Human Services (HHS).
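
As a rough illustration of how the "minimum necessary" standard and access logging might look in application code, the Python sketch below filters a patient record down to the fields a given role is permitted to see and writes an audit entry for every attempt. The role names, field names, and the `minimum_necessary` helper are hypothetical; a real policy would come from the organization's own risk analysis, not from this example.

```python
import logging

# Hypothetical role-to-field policy, invented for this sketch.
ALLOWED_FIELDS = {
    "summarizer": {"visit_notes", "medications"},
    "billing_bot": {"insurance_id", "visit_date"},
}

audit_log = logging.getLogger("phi_audit")


class AccessDenied(Exception):
    """Raised when a caller's role has no configured access to PHI."""


def minimum_necessary(record: dict, role: str, user_id: str) -> dict:
    """Return only the fields the role may see, logging every attempt."""
    allowed = ALLOWED_FIELDS.get(role)
    if allowed is None:
        # Denied attempts are logged too, not only successful ones.
        audit_log.warning("DENY user=%s role=%s", user_id, role)
        raise AccessDenied(f"role {role!r} has no PHI access")
    view = {k: v for k, v in record.items() if k in allowed}
    # Log field names only, never the PHI values themselves.
    audit_log.info("ALLOW user=%s role=%s fields=%s", user_id, role, sorted(view))
    return view
```

Passing only the filtered view to the model, rather than the full record, keeps fields such as names or insurance numbers out of prompts that do not need them. Encryption in transit and at rest would be handled by the surrounding infrastructure and is not shown here.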

How to Make AI HIPAA-Compliant

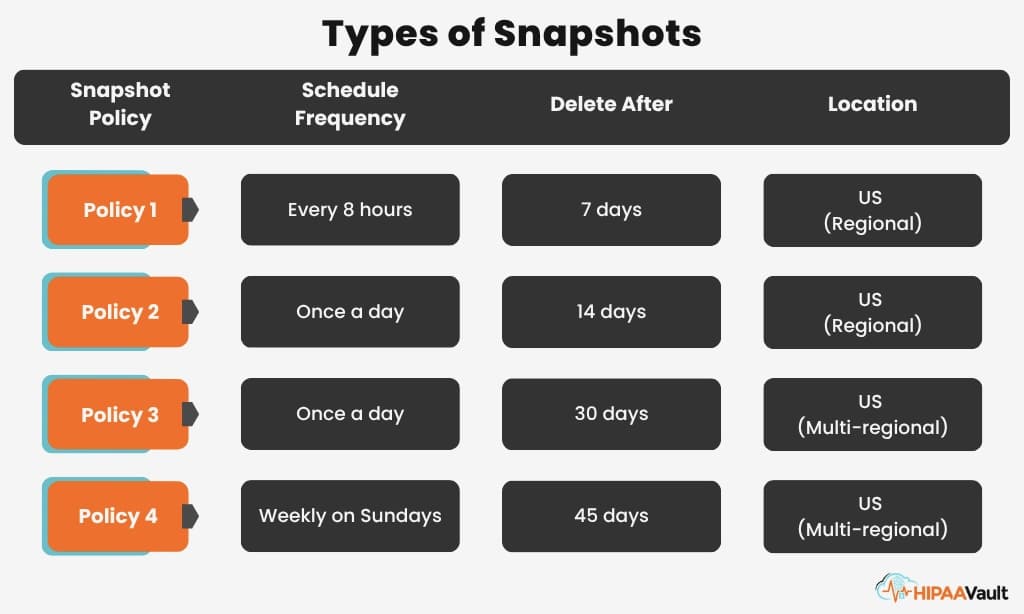

Deploying HIPAA-compliant AI systems starts with infrastructure. All AI applications that touch PHI must run on secure, compliant environments. This includes encrypted servers, intrusion detection systems, firewalls, multi-factor authentication, and regular patching. For instance, HIPAA Vault’s fully managed cloud platform provides hardened virtual machines, geo-redundant backups, SIEM logging, and continuous threat monitoring—along with a signed BAA to cover compliance responsibilities³.

Developers must also be careful when using training data. Before PHI is used to train an AI model, it should be de-identified using either the Safe Harbor or the Expert Determination method defined by HHS (45 CFR §164.514(b)). If de-identification is not possible, the model and the infrastructure it runs on must meet full HIPAA requirements. Moreover, external vendors whose AI services handle PHI must also be HIPAA-compliant and willing to sign a BAA. Sending PHI to an API from a non-compliant vendor, even unintentionally, can constitute a serious violation. HHS has clarified that any third-party processing of PHI must be governed by a signed BAA and appropriate security controls (HHS).
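
To make the Safe Harbor idea more concrete, here is a minimal Python sketch of a de-identification pass over one record. It is deliberately incomplete: Safe Harbor lists 18 categories of identifiers, and this example handles only a few of them (a handful of direct identifiers, dates, ZIP codes, and ages over 89). The field names and the `restricted_zip3` parameter are assumptions made for illustration, and free-text fields such as clinical notes would need separate treatment.

```python
from datetime import date

# Hypothetical field names for direct identifiers; not a complete list.
DIRECT_IDENTIFIERS = {"name", "ssn", "email", "phone", "mrn", "address"}


def deidentify(record: dict, restricted_zip3: frozenset = frozenset()) -> dict:
    """Apply a partial, Safe Harbor-style transformation to one record."""
    # Drop direct identifiers outright.
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

    # Dates: keep the year only.
    for key, value in list(out.items()):
        if isinstance(value, date):
            out[key] = value.year

    # ZIP codes: keep the first three digits, or "000" when the prefix is
    # in the caller-supplied set of sparsely populated prefixes.
    zip_code = out.get("zip")
    if zip_code is not None:
        prefix = str(zip_code)[:3]
        out["zip"] = "000" if prefix in restricted_zip3 else prefix

    # Ages over 89 are aggregated into a single category.
    age = out.get("age")
    if isinstance(age, int) and age > 89:
        out["age"] = "90+"
    return out
```

A pass like this is a starting point rather than proof of de-identification. Whether a dataset actually satisfies Safe Harbor or needs Expert Determination is a judgment for the organization's compliance team.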

The National Institute of Standards and Technology (NIST) provides a helpful AI Risk Management Framework that healthcare organizations can use to evaluate privacy, fairness, and security risks when deploying AI systems⁵. This includes evaluating the potential for data leakage, model bias, and unintended PHI exposure during training or inference.

Common AI Use Cases Under HIPAA

AI’s use in healthcare spans a wide range of applications—but not all of them pose the same compliance risks. One common implementation is AI-assisted clinical documentation. Tools that automatically generate physician notes from audio or text inputs must handle PHI with strict safeguards, ensuring that storage, transmission, and retrieval processes are fully encrypted and access is limited to authorized users.

Another growing use case is patient engagement through AI chatbots or virtual assistants. These tools may gather symptoms, health histories, or even insurance details—all considered PHI under HIPAA. If the chatbot’s backend stores or transmits this data without proper encryption or operates on non-compliant infrastructure, the organization could face serious regulatory consequences. HIPAA-compliant chatbot solutions must log interactions, limit access, and verify user identities through secure channels.
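
One way a chatbot backend might approach interaction logging is sketched below in Python: each audit entry records who did what and when, and is chained to the previous entry with an HMAC so that later edits to the log can be detected. This is an illustrative design choice, not something HIPAA prescribes. The function names and entry fields are hypothetical, and in practice the key would live in a managed secret store rather than in application code.

```python
import hashlib
import hmac
import json


def append_entry(log: list, key: bytes, user_id: str, action: str, timestamp: str) -> dict:
    """Append an audit entry whose MAC covers its content and the previous MAC."""
    prev_mac = log[-1]["mac"] if log else ""
    # Store metadata about the interaction, not the message text itself.
    body = {"user_id": user_id, "action": action, "timestamp": timestamp, "prev": prev_mac}
    payload = json.dumps(body, sort_keys=True).encode()
    entry = dict(body, mac=hmac.new(key, payload, hashlib.sha256).hexdigest())
    log.append(entry)
    return entry


def verify_chain(log: list, key: bytes) -> bool:
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_mac = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "mac"}
        if body.get("prev") != prev_mac:
            return False
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, entry["mac"]):
            return False
        prev_mac = entry["mac"]
    return True
```

Running `verify_chain` during periodic reviews gives auditors some assurance that the log they are reading is the log that was written. Deleting entries from the end of the log would not be caught by this sketch alone.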

Similarly, predictive analytics models used to assess patient risk, flag sepsis indicators, or suggest interventions based on clinical data must undergo thorough risk assessments. If trained on identifiable PHI, these models should incorporate technical controls to prevent data memorization and ensure that any retained data is anonymized or secured. In each of these scenarios, the developer and the healthcare organization share responsibility for maintaining compliance throughout the data lifecycle.

HIPAA Vault’s Role in Secure AI Deployments

HIPAA Vault simplifies the complex challenge of deploying AI in healthcare by offering a full suite of HIPAA-compliant hosting and support services. Developers and healthcare teams can run AI applications on pre-hardened servers equipped with full disk encryption, centralized logging, and real-time intrusion detection—all managed by HIPAA compliance experts. This infrastructure supports both model training and inference while maintaining alignment with the HIPAA Security Rule.

Beyond hosting, HIPAA Vault offers expert consulting to help organizations assess the compliance posture of their AI initiatives. Whether evaluating a machine learning model’s training data, reviewing an API integration, or preparing for a third-party audit, our team provides actionable guidance rooted in real-world healthcare standards. We also streamline BAA management, reducing legal friction and accelerating time to deployment for new AI tools.

Organizations that partner with HIPAA Vault benefit from continuous compliance monitoring and proactive risk mitigation, allowing them to focus on innovation instead of regulatory overhead. With a proven track record supporting healthcare developers and SaaS vendors, HIPAA Vault enables secure, compliant AI at scale.

Final Thoughts: AI and HIPAA Are Compatible—with the Right Safeguards

AI holds extraordinary promise for improving healthcare outcomes and efficiency—but it must be deployed with care. HIPAA compliance is not a barrier to innovation; rather, it provides a blueprint for responsibly handling sensitive health data. By securing infrastructure, evaluating model risks, and partnering with HIPAA-compliant vendors, healthcare organizations can embrace AI without compromising patient privacy or regulatory integrity.

Looking to launch your next AI healthcare app securely?

🚀 Contact HIPAA Vault to build, host, and scale your AI tools in a HIPAA-compliant cloud environment